Word2Vec study notes, with a TensorFlow implementation of CBOW.

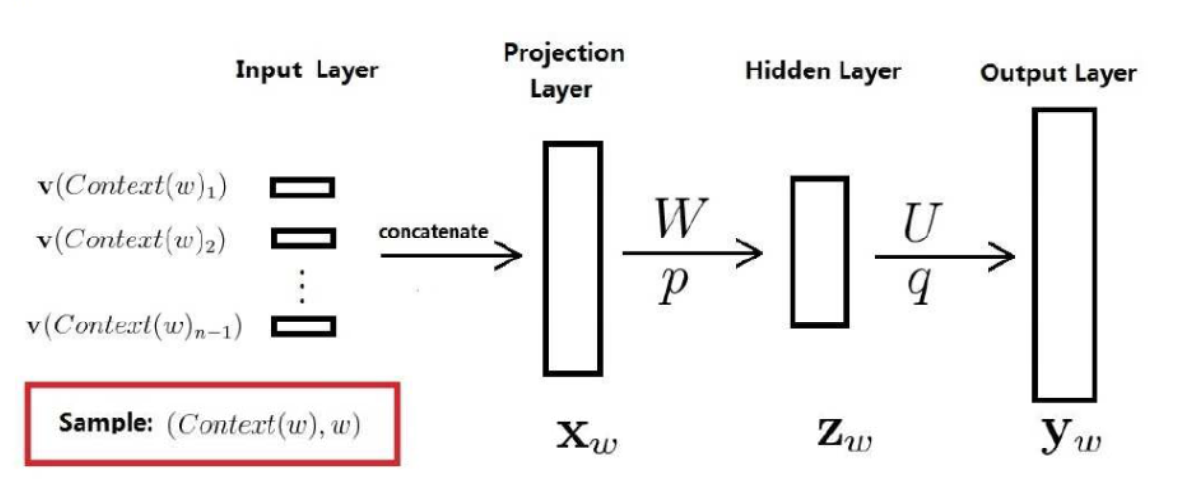

Neural Probabilistic Language Model

- Word vector: $v(w) \in R^m$

- $m$ is the dimension of the word vectors, typically on the order of $10^1\sim 10^2$.

- Network parameters: $W \in R^{n_h×(n-1)m}$, $p \in R^{n_h}$, $U\in R^{N×n_h}$, $q \in R^N$

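- $n$: the context size; the input layer takes the vectors of the $n-1$ context words, and $n$ usually does not exceed 5.

- $n_h$: the dimension of the hidden layer, chosen by the user, typically on the order of $10^2$.

- $N$: the vocabulary size (the number of distinct words in the corpus), typically on the order of $10^4\sim 10^5$.

- Backpropagation through the network updates $v(w)$ together with the weights; the trained $v(w)$ are the word vectors (w2v).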

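- In CBOW, $x_w$ is the sum of the context word vectors. (In the neural probabilistic language model the input is instead the concatenation of the $n-1$ context vectors, which is why $W$ has $(n-1)m$ columns.)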
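To make the shapes above concrete, here is a minimal pure-Python sketch of one forward pass through such a network. The toy sizes, the random initialization, and the helper names (`matvec`, `softmax`, `nplm_forward`) are assumptions for illustration only, and the input is taken to be the concatenation of the $n-1$ context vectors, as the $(n-1)m$ columns of $W$ suggest.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def nplm_forward(context_ids, V, W, p, U, q):
    # Concatenate the n-1 context word vectors: length (n-1)*m.
    x = [component for i in context_ids for component in V[i]]
    # Hidden layer: z = tanh(W x + p), length n_h.
    z = [math.tanh(a + b) for a, b in zip(matvec(W, x), p)]
    # Output layer: y = U z + q, one score per vocabulary word.
    y = [a + b for a, b in zip(matvec(U, z), q)]
    return softmax(y)


def random_matrix(rng, rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


# Toy sizes (assumed): m=4, n=3, n_h=5, N=10.
m, n, n_h, N = 4, 3, 5, 10
rng = random.Random(0)
V = random_matrix(rng, N, m)              # one word vector per vocabulary word
W = random_matrix(rng, n_h, (n - 1) * m)  # hidden-layer weights
p = [0.0] * n_h
U = random_matrix(rng, N, n_h)            # stored with one row per vocabulary word
q = [0.0] * N

probs = nplm_forward([2, 7], V, W, p, U, q)
print(len(probs), round(sum(probs), 6))
```

The output is a probability for every one of the $N$ vocabulary words, so the cost of the last layer grows linearly with the vocabulary.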

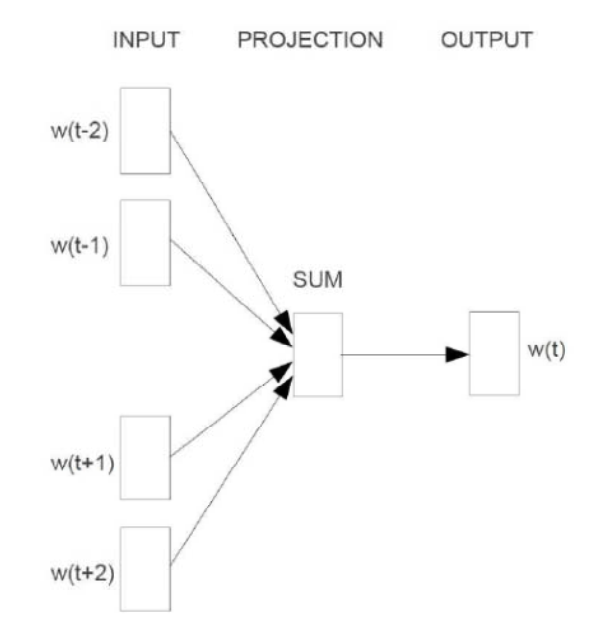

CBOW

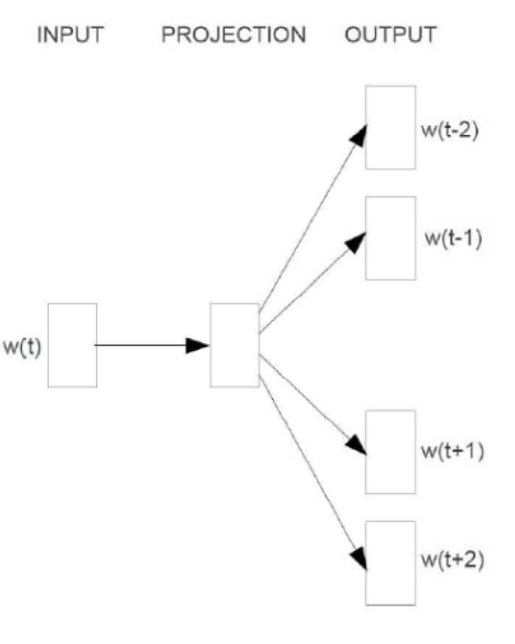

Skip-Gram

How do we handle too many classes?

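The vocabulary contains $N$ words, so predicting a word is an $N$-way classification. Doing this directly means the output layer needs the parameters $U\in R^{N×n_h}$, which is too large. There are two ways around this: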

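- 1. Turn the single multi-class classification into a sequence of binary classifications: Hierarchical Softmax. Each binary classifier (one per inner node of the tree) has its own parameter vector, so predicting one word involves roughly $\log_2N×m$ parameters, even though the tree has $N-1$ inner nodes in total.

- 2. Give every word its own parameter vector. The parameter count drops only slightly, to $N×m$, but each training step updates just a small part of them rather than all of them: negative sampling.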
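A rough back-of-the-envelope comparison of the three output layers can be sketched as follows. $N = 50000$ matches the `vocabulary_size` used later in these notes; $m = 128$, $n_h = 128$, and $k = 5$ negative samples are assumed values picked only for illustration, and the path length uses the balanced-tree estimate $\lceil\log_2 N\rceil$ rather than real Huffman path lengths.

```python
import math


def output_layer_costs(N, m, n_h, k):
    """Parameter counts for the output layer under each strategy."""
    return {
        # Full softmax: U (N x n_h) plus the bias q (N).
        "full_softmax_total": n_h * N + N,
        # Hierarchical softmax: one m-vector per inner node (N - 1 of them),
        # but one word only touches the nodes on its own path.
        "hs_total": (N - 1) * m,
        "hs_per_word": math.ceil(math.log2(N)) * m,
        # Negative sampling: one m-vector per word, but a step only
        # touches the positive word and its k negative samples.
        "neg_total": N * m,
        "neg_per_step": (k + 1) * m,
    }


costs = output_layer_costs(N=50000, m=128, n_h=128, k=5)
for name, value in costs.items():
    print(f"{name:>20}: {value:,}")
```

Under these assumptions the total parameter counts are all in the millions; what changes is how many of them a single training step has to read and update.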

Gradient computation for Hierarchical Softmax (CBOW)

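- $p^w$: the path from the root to the leaf node corresponding to $w$.

- $l^w$: the number of nodes on the path $p^w$.

- $p_1^w,p_2^w,…,p_{l^w}^w$: the $l^w$ nodes on the path $p^w$, where $p^w_1$ is the root and $p_{l^w}^w$ is the node corresponding to word $w$.

- $d_2^w,…,d_{l^w}^w \in \{0,1\}$: the Huffman code of word $w$, made up of $l^w-1$ bits (the root has no code); $d_j^w$ is the code of the $j$-th node on the path $p^w$.

- $\theta_1^w,\theta_2^w,…,\theta_{l^w-1}^w \in R^m$: the vectors of the non-leaf nodes on the path $p^w$; $\theta_j^w$ is the vector of the $j$-th non-leaf node on the path.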

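For any word $w$ in the dictionary $D$, the Huffman tree contains exactly one path $p^w$ from the root to the node corresponding to $w$. The path has $l^w-1$ branches; each branch is one binary classification and yields a probability, and the product of all these probabilities is the desired $p(w|Context(w))$.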

$$p(w|Context(w)) = \prod_{j=2}^{l^w}p(d_j^w|x_w,\theta^w_{j-1})$$

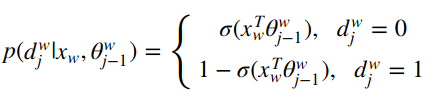

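where each factor is a logistic-regression probability (following word2vec's convention that code 0 is the positive class):

$$p(d_j^w|x_w,\theta^w_{j-1}) = \begin{cases}\sigma(x_w^T\theta^w_{j-1}), & d_j^w=0\\ 1-\sigma(x_w^T\theta^w_{j-1}), & d_j^w=1\end{cases}$$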
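The product over the path, and the gradients it leads to, can be sketched in plain Python. The sketch uses the usual word2vec convention that a code bit of 0 has probability $\sigma(x_w^T\theta)$ and a bit of 1 has probability $1-\sigma(x_w^T\theta)$; that convention, the tiny three-leaf tree, and the helper names are all assumptions of this sketch. With that convention, the gradient of $\log p(w|Context(w))$ with respect to $\theta_{j-1}^w$ is $(1 - d_j^w - \sigma(x_w^T\theta_{j-1}^w))\,x_w$, and the gradient with respect to $x_w$ sums the same scalar factor times $\theta_{j-1}^w$ over the path.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def hs_probability(x_w, codes, thetas):
    """p(w | Context(w)) as a product of binary decisions along the path.

    codes  : Huffman code bits d_2 .. d_{l^w}
    thetas : inner-node vectors theta_1 .. theta_{l^w - 1}
    """
    prob = 1.0
    for d, theta in zip(codes, thetas):
        s = sigmoid(dot(x_w, theta))
        prob *= s if d == 0 else 1.0 - s  # assumed convention: bit 0 -> sigma
    return prob


def hs_gradients(x_w, codes, thetas):
    """Gradients of log p(w | Context(w)) w.r.t. each theta and w.r.t. x_w."""
    grad_x = [0.0] * len(x_w)
    grad_thetas = []
    for d, theta in zip(codes, thetas):
        g = 1 - d - sigmoid(dot(x_w, theta))  # scalar factor shared by both gradients
        grad_thetas.append([g * xi for xi in x_w])
        grad_x = [gx + g * ti for gx, ti in zip(grad_x, theta)]
    return grad_thetas, grad_x


# A tiny hypothetical tree with three leaves: codes "0", "10", "11".
x_w = [0.5, -0.2]
theta_root, theta_inner = [0.1, 0.3], [-0.4, 0.2]
leaves = {
    "a": ([0], [theta_root]),
    "b": ([1, 0], [theta_root, theta_inner]),
    "c": ([1, 1], [theta_root, theta_inner]),
}
total = sum(hs_probability(x_w, codes, thetas) for codes, thetas in leaves.values())
grad_thetas, grad_x = hs_gradients(x_w, *leaves["b"])
print(round(total, 6))
```

Because every inner node splits its probability mass between its two children, the leaf probabilities sum to 1 without ever computing a normalizer over the whole vocabulary.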

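Negative-sampling model (CBOW)

Goal: speed up training and improve the quality of the resulting word vectors.

Instead of a Huffman tree, it uses random negative sampling.

What is a negative sample: for a given $Context(w)$, the word $w$ is the positive sample and every other word is a negative sample.

The subset of negative samples: $NEG(w)$.

Given a positive sample $(Context(w),w)$, we want to maximize: $g(w) = \underset{u \in \{w\} \cup NEG(w)}{\prod}p(u|Context(w))$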

where:

$$p(u|Context(w)) = [\sigma(x_w^T\theta^u)]^{L^w(u)}\cdot[1-\sigma(x_w^T\theta^u)]^{1-L^w(u)}$$

$L^w(\tilde w)$ indicates whether the word $\tilde w$ is the word $w$ itself (1 if it is, 0 otherwise).

$\theta^u \in R^m$ is the auxiliary parameter vector of word $u$.
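As a minimal sketch of this objective, the code below evaluates $\log g(w)$ and takes one gradient-ascent step on the auxiliary vectors. It assumes each factor is the logistic probability $\sigma(x_w^T\theta^u)$ for the positive word and $1-\sigma(x_w^T\theta^u)$ for a negative one; the example words, the learning rate, and the function names are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_g(x_w, w, negatives, theta):
    """log g(w): sum of log p(u | Context(w)) over u in {w} and NEG(w)."""
    total = 0.0
    for u in [w] + list(negatives):
        s = sigmoid(dot(x_w, theta[u]))
        total += math.log(s if u == w else 1.0 - s)
    return total


def neg_sampling_step(x_w, w, negatives, theta, lr=0.1):
    """One gradient-ascent step on log g(w); returns the gradient w.r.t. x_w."""
    grad_x = [0.0] * len(x_w)
    for u in [w] + list(negatives):
        label = 1 if u == w else 0              # L^w(u)
        g = label - sigmoid(dot(x_w, theta[u]))
        # Accumulate the x_w gradient with theta[u] before it is updated.
        grad_x = [gx + g * t for gx, t in zip(grad_x, theta[u])]
        theta[u] = [t + lr * g * xi for t, xi in zip(theta[u], x_w)]
    return grad_x


x_w = [0.5, -0.2, 0.1]                          # summed context vectors (toy values)
theta = {word: [0.0, 0.0, 0.0] for word in ["fox", "the", "of"]}
before_val = log_g(x_w, "fox", ["the", "of"], theta)
grad_x = neg_sampling_step(x_w, "fox", ["the", "of"], theta)
after_val = log_g(x_w, "fox", ["the", "of"], theta)
print(before_val, after_val)
```

Only the vectors of the positive word and the sampled negatives are touched in a step, which is where the speed-up over a full softmax comes from. In CBOW the returned `grad_x` would then be applied to each context word vector.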

TensorFlow implementation

The code below implements a full softmax over all classes; it uses neither negative sampling nor hierarchical softmax.

`# These are all the modules we'll be using later. Make sure you can import them`

`Data size 17005207`

`print(words[:50])`

`['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against', 'early', 'working', 'class', 'radicals', 'including', 'the', 'diggers', 'of', 'the', 'english', 'revolution', 'and', 'the', 'sans', 'culottes', 'of', 'the', 'french', 'revolution', 'whilst', 'the', 'term', 'is', 'still', 'used', 'in', 'a', 'pejorative', 'way', 'to', 'describe', 'any', 'act', 'that', 'used', 'violent', 'means', 'to', 'destroy', 'the']`

`vocabulary_size = 50000`

`Most common words (+UNK) [['UNK', 418391], ('the', 1061396), ('of', 593677), ('and', 416629), ('one', 411764)]`
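The vocabulary-building cell is truncated above, so the following is only a sketch of one way to get output of that shape, not the notes' actual code. It keeps the `vocabulary_size - 1` most frequent words and maps everything else to index 0 (`'UNK'`); the function name `build_dataset` and the toy input are assumptions.

```python
from collections import Counter


def build_dataset(words, vocabulary_size):
    """Map words to integer ids, sending rare words to 'UNK' (id 0)."""
    # 'UNK' is a mutable [word, count] pair so its count can be filled in later;
    # the remaining entries are (word, count) tuples from Counter.
    counts = [["UNK", 0]]
    counts.extend(Counter(words).most_common(vocabulary_size - 1))
    dictionary = {word: idx for idx, (word, _) in enumerate(counts)}
    data = [dictionary.get(word, 0) for word in words]
    counts[0][1] = data.count(0)  # how many tokens fell back to 'UNK'
    reverse_dictionary = {idx: word for word, idx in dictionary.items()}
    return data, counts, dictionary, reverse_dictionary


toy_words = ["the", "the", "the", "cat", "cat", "sat"]
data, counts, dictionary, reverse_dictionary = build_dataset(toy_words, vocabulary_size=3)
print(counts)
print(data)
```

Starting the list with a mutable `['UNK', count]` pair followed by `(word, count)` tuples would explain the mixed list and tuple formatting in the printed output above.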

CBOW implementation

`data_index = 0`

`data: ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against', 'early', 'working', 'class', 'radicals', 'including', 'the']`
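The batch-generation cell is also truncated, so here is a simplified sketch of how CBOW training pairs can be cut out of such a sequence: each target word is paired with the `window` words on either side of it. The generator name `cbow_pairs` and the window size are assumptions, and unlike the original cell this version keeps no global `data_index` and does not pack fixed-size batches.

```python
def cbow_pairs(data, window):
    """Yield (context, target) pairs; context is `window` items on each side."""
    for i in range(window, len(data) - window):
        context = data[i - window:i] + data[i + 1:i + window + 1]
        yield context, data[i]


words = ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first']
pairs = list(cbow_pairs(words, window=1))
for context, target in pairs[:3]:
    print(context, '->', target)
```

In a real pipeline the same slicing would be applied to the integer ids rather than to the raw words.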

`batch_size = 128`

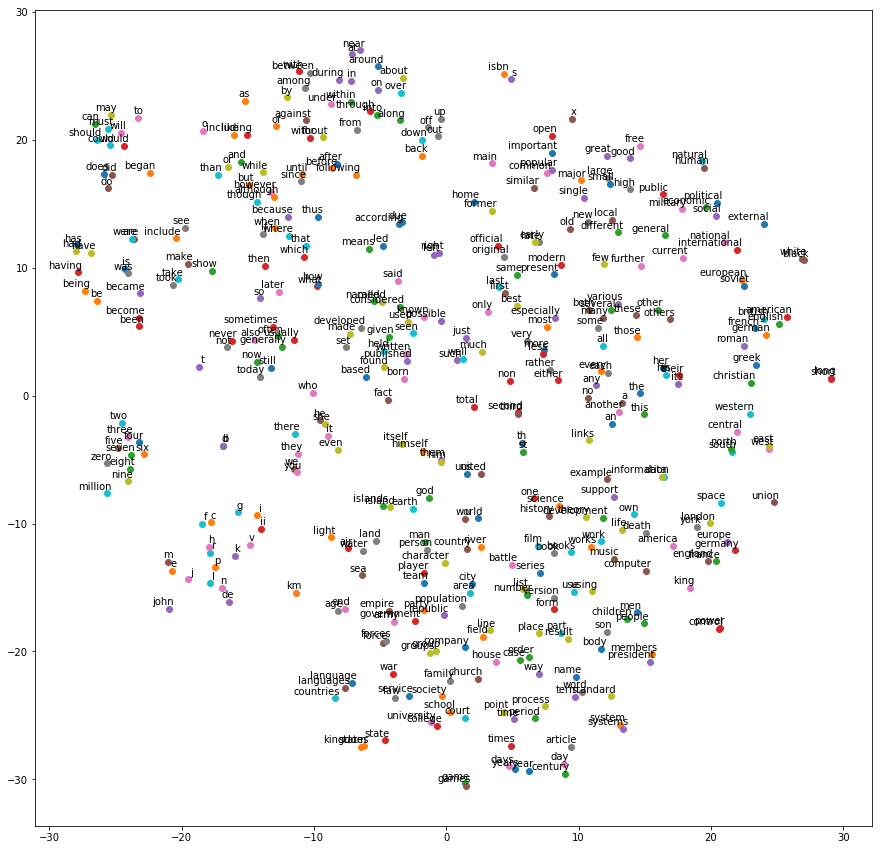
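Since the graph definition is cut off, the loss it is described as computing (CBOW with a full softmax) can be sketched for a single example in plain Python. This is an illustration of the technique under assumed names and toy sizes, not a transcription of the TensorFlow graph.

```python
import math


def cbow_softmax_loss(context_ids, target_id, embeddings, weights, biases):
    """Cross-entropy loss of a full-softmax CBOW model for one example."""
    m = len(embeddings[0])
    # x_w: sum of the context word vectors.
    x_w = [sum(embeddings[i][d] for i in context_ids) for d in range(m)]
    # One logit per vocabulary word: no sampling, no tree.
    logits = [dot_row + b for dot_row, b in zip(
        (sum(wd * xd for wd, xd in zip(row, x_w)) for row in weights), biases)]
    # log-sum-exp with the max subtracted for numerical stability.
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(v - top) for v in logits))
    return log_norm - logits[target_id]  # equals -log softmax(logits)[target_id]


# Toy setup (assumed): 6 words, 3-dimensional embeddings, untrained output layer.
vocab, dim = 6, 3
embeddings = [[0.1 * (i + d) for d in range(dim)] for i in range(vocab)]
weights = [[0.0] * dim for _ in range(vocab)]
biases = [0.0] * vocab
loss = cbow_softmax_loss([0, 2], 1, embeddings, weights, biases)
print(loss)
```

With an all-zero output layer every word is equally likely, so the loss equals $\log 6$ here; the normalizer sums over the entire vocabulary, which is the cost that hierarchical softmax and negative sampling avoid.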

`with tf.Session(graph=graph) as session:`

`WARNING:tensorflow:From <ipython-input-13-a25f3f405467>:2: initialize_all_variables (from tensorflow.python.ops.variables) is deprecated and will be removed after 2017-03-02.`